
Web accessibility is an incredibly important topic, and should be considered as essential as subjects like security, UX (user experience), and design. Not only can poor accessibility cause usability problems for some people, it can act as a complete barrier to entry. Your audience may be left feeling that you don't care about them, leading them to go elsewhere, to your competitors (whether you offer information, a product, or a service).

The best way to know where to start with improving the accessibility of your website is to perform an audit.


Preparing for the Audit

There are several key things you need to do before you start an accessibility audit on your website:

- Define the scope of the audit. Will you be testing every single page, or a few key sample pages? If your site is large but runs on a handful of key templates, it probably only makes sense to test a few representative pages built on each. However, if each page is a bespoke piece, you may need to extend the audit to cover all of them.

- What tools and methods will you use to test? Automated tools are useful because they run consistent tests and give objective results, but they miss some issues that a person would find. For example, the accessibility tools in Lighthouse are very basic, and fall short of what you can find in the Firefox accessibility inspector. The Firefox tools, in turn, are outstripped by more dedicated tools such as axe DevTools or the Wave extension. Ideally, your chosen approach should combine automated and manual checks, much like a typical QA (quality assurance) process.

- If you are performing manual testing, you will also need an agreed methodology for those tests, so that they can be repeated as closely as possible. This keeps testing fair across the website, and helps you track the effect of any changes made in light of the first audit.

- An agreed format to record the results. This is especially important when the testing is a mix of manual and automated, and where there are multiple people performing the audit. This can be as simple as a spreadsheet using rows for pages/sections under test, and columns for specific tests. I have an example accessibility audit spreadsheet template which you may copy, alter, and use as you wish for performing your own audits.
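If results from several testers or tools are collected programmatically before they reach the spreadsheet, the same rows-and-columns layout can be produced with a few lines of code. The sketch below is illustrative only: the `AuditRow` shape, the `pass`/`fail`/`n/a` outcomes, and the column names are hypothetical and not taken from the template.

```typescript
// Hypothetical outcome values for one test on one page.
type Outcome = "pass" | "fail" | "n/a";

// One spreadsheet row: a page or section, with an outcome per test column.
interface AuditRow {
  page: string;
  results: Record<string, Outcome>;
}

// Quote a CSV field if it contains a comma, double quote, or newline,
// doubling any embedded quotes (RFC 4180 style).
function csvField(value: string): string {
  return /[",\n]/.test(value) ? `"${value.replace(/"/g, '""')}"` : value;
}

// One row per page, one column per test. Tests not yet run on a page
// are left blank rather than being treated as passes.
function toCsv(tests: string[], rows: AuditRow[]): string {
  const header = ["Page", ...tests].map(csvField).join(",");
  const body = rows.map((row) =>
    [row.page, ...tests.map((t) => row.results[t] ?? "")].map(csvField).join(",")
  );
  return [header, ...body].join("\n");
}

// Example with made-up pages and results.
const csv = toCsv(
  ["Colour contrast", "Keyboard navigation", "Alt text"],
  [
    { page: "/", results: { "Colour contrast": "pass", "Keyboard navigation": "fail", "Alt text": "pass" } },
    { page: "/contact", results: { "Colour contrast": "fail", "Keyboard navigation": "pass" } },
  ]
);
console.log(csv);
```

Leaving untested cells blank keeps gaps visible in the sheet, instead of letting a missing check quietly read as a pass.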

Automated Testing

Automated testing can be as simple as running a tool or extension directly in the browser, or you could use something like Pa11y to run a series of checks against multiple web pages in a headless browser.

Bear in mind that automated testing cannot cover the whole spectrum of accessibility issues that might arise. For example, poor alt text on images is very difficult to detect automatically, yet it causes real problems for people who rely on that text in place of the image.

However, automated tests are still a vital part of your accessibility testing strategy. They catch basic, easy-to-find issues that would be time-consuming to check by hand.

What kind of things should you test for automatically? Anything and everything you can:

- Well-formed document structure, such as correctly ordered headings and no duplicate `id` attributes across elements.

- Colour contrast for text and interactive elements.

- Some checks that elements with pointer event handlers (such as click handlers) can also be operated with a keyboard.

- Redundant `alt` text used on images, such as "Image of..." or "Picture of..."
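To make some of these checks more concrete, here is a minimal sketch of three of them: skipped heading levels, duplicate `id` values, and redundant `alt` phrases. It works on a hypothetical, flattened list of elements (the `El` type is an assumption for illustration, not a real DOM API); real tools run far more thorough versions of checks like these against the live page.

```typescript
// A deliberately simplified, hypothetical element model (not the real DOM),
// listed in document order.
interface El {
  tag: string; // lower-case tag name, e.g. "h2" or "img"
  id?: string;
  alt?: string;
}

// Flag headings that skip a level on the way down, e.g. an h2 followed by an h4.
// Going back up (e.g. h4 then h2) is allowed.
function headingSkips(els: El[]): string[] {
  const issues: string[] = [];
  let previous = 0; // 0 means no heading seen yet
  for (const el of els) {
    const match = /^h([1-6])$/.exec(el.tag);
    if (!match) continue;
    const level = Number(match[1]);
    if (previous > 0 && level > previous + 1) {
      issues.push(`h${previous} followed by h${level}`);
    }
    previous = level;
  }
  return issues;
}

// Every id value that appears on more than one element.
function duplicateIds(els: El[]): string[] {
  const seen = new Set<string>();
  const dupes = new Set<string>();
  for (const el of els) {
    if (el.id === undefined) continue;
    if (seen.has(el.id)) dupes.add(el.id);
    seen.add(el.id);
  }
  return Array.from(dupes);
}

// Alt text that opens with a redundant phrase such as "Image of...".
const REDUNDANT_ALT = /^\s*(image|picture|photo|graphic) of\b/i;

function redundantAlt(els: El[]): string[] {
  return els
    .filter((el) => el.tag === "img" && el.alt !== undefined && REDUNDANT_ALT.test(el.alt))
    .map((el) => el.alt as string);
}
```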

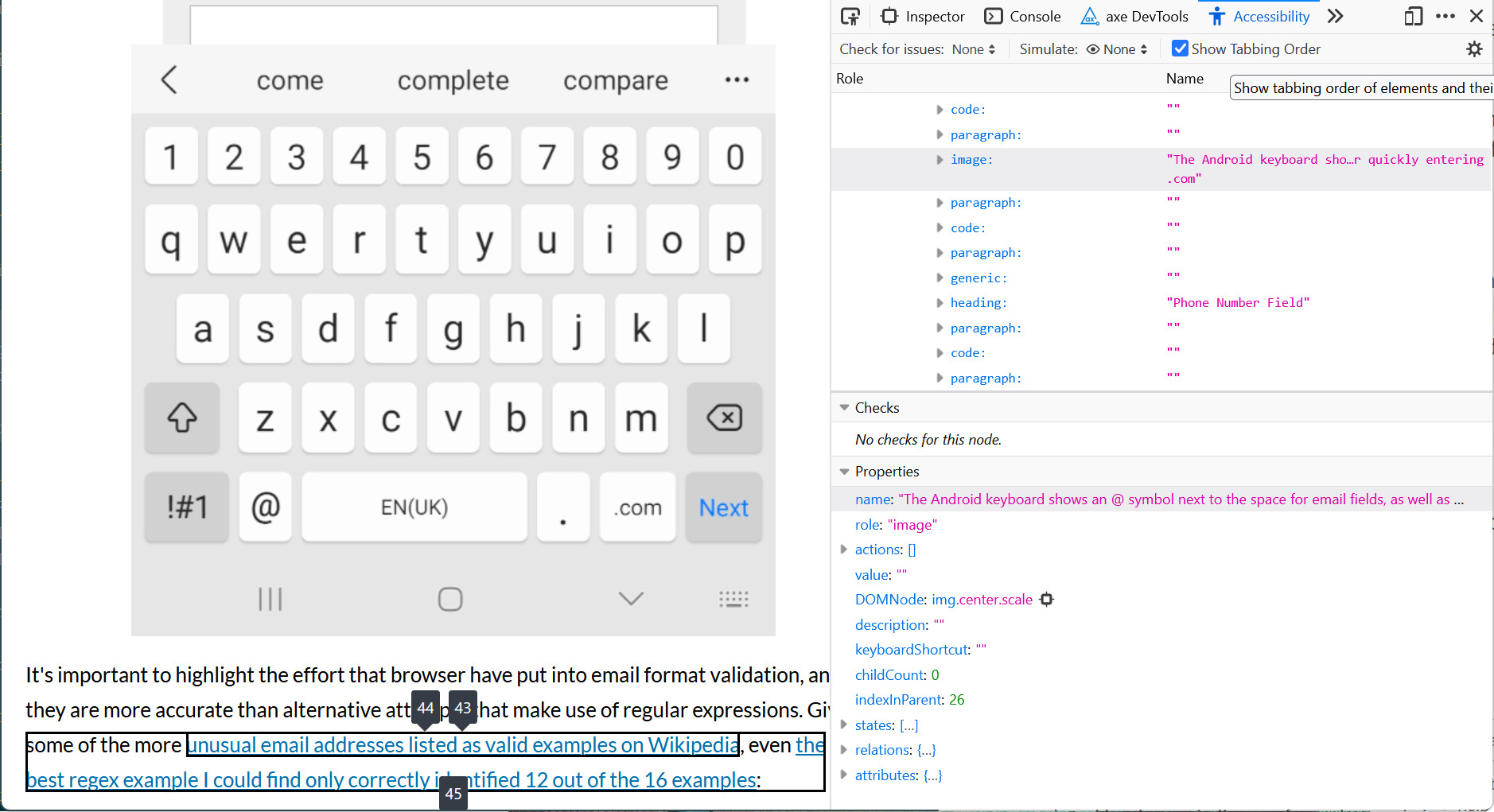

Firefox Accessibility Inspector

Of the accessibility tools built into browsers, those found in Firefox are the best, giving a clear view of the accessibility tree and all the associated details (including state) for the selected element. It can also run basic checks on colour contrast, keyboard access, and text labels, simulate different types of colour blindness, and visually indicate the document's tabbing order.

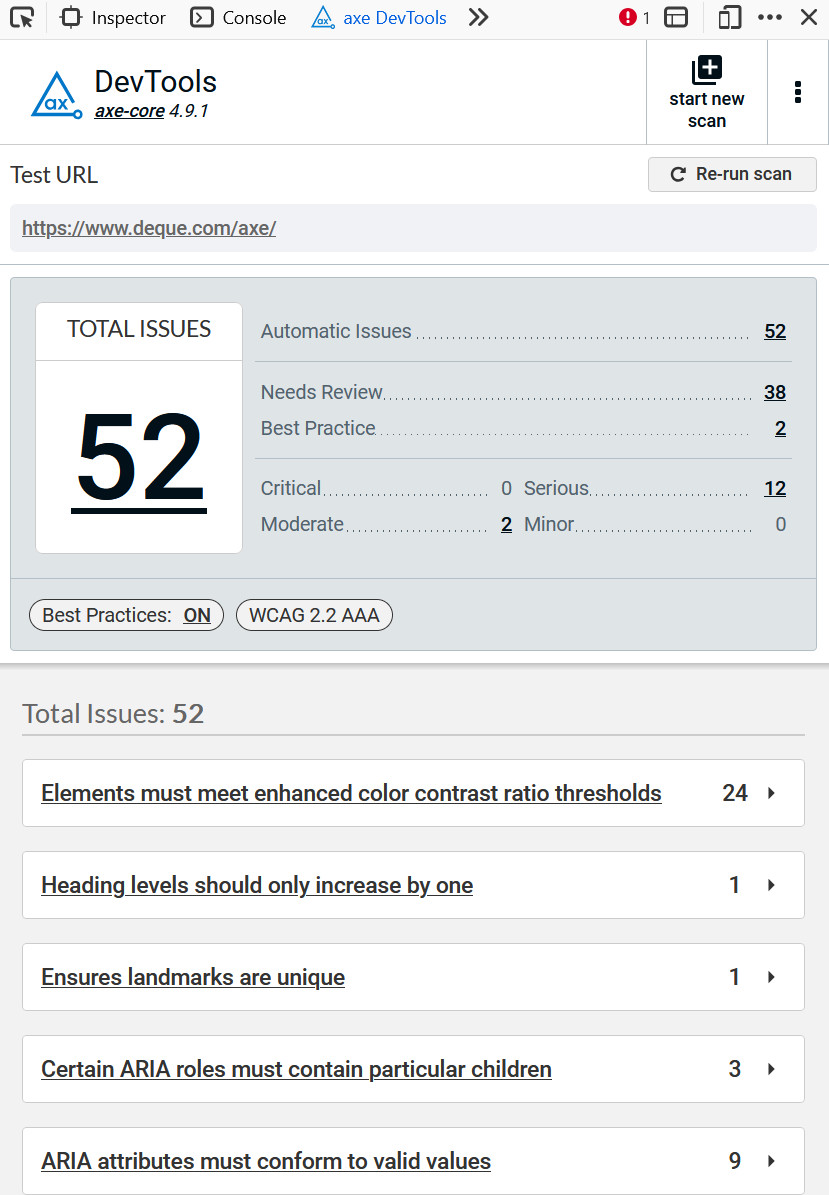
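The colour contrast checks in these tools are based on the WCAG 2.x contrast ratio: each colour's relative luminance is computed from its linearised sRGB channels, and the ratio is (lighter + 0.05) / (darker + 0.05). A minimal sketch, assuming colours are given as 0-255 RGB triples with no transparency:

```typescript
// An RGB colour as three 0-255 channel values (no alpha/transparency).
type RGB = [number, number, number];

// Linearise one sRGB channel, using the constants from the WCAG 2.x
// definition of relative luminance.
function channel(value: number): number {
  const c = value / 255;
  return c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

// Relative luminance: 0 for black, 1 for white.
function luminance([r, g, b]: RGB): number {
  return 0.2126 * channel(r) + 0.7152 * channel(g) + 0.0722 * channel(b);
}

// Contrast ratio from 1:1 (identical colours) to 21:1 (black on white).
function contrastRatio(a: RGB, b: RGB): number {
  const la = luminance(a);
  const lb = luminance(b);
  const lighter = Math.max(la, lb);
  const darker = Math.min(la, lb);
  return (lighter + 0.05) / (darker + 0.05);
}

// WCAG level AA: at least 4.5:1 for normal text, 3:1 for large text.
function passesAA(fg: RGB, bg: RGB, largeText = false): boolean {
  return contrastRatio(fg, bg) >= (largeText ? 3 : 4.5);
}
```

For example, `#767676` text on a white background works out at roughly 4.54:1, just passing AA for normal text, while `#777777` falls slightly short.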

Axe Accessibility Testing Tools

Axe offers several ways to test your websites, ranging from a browser plugin to command line tools that you can run in a headless browser as part of your continuous integration pipeline. It tests more closely against the Web Content Accessibility Guidelines (WCAG), including some of the stricter AAA criteria. Running it from the browser is a one-click affair, and produces a concise report:

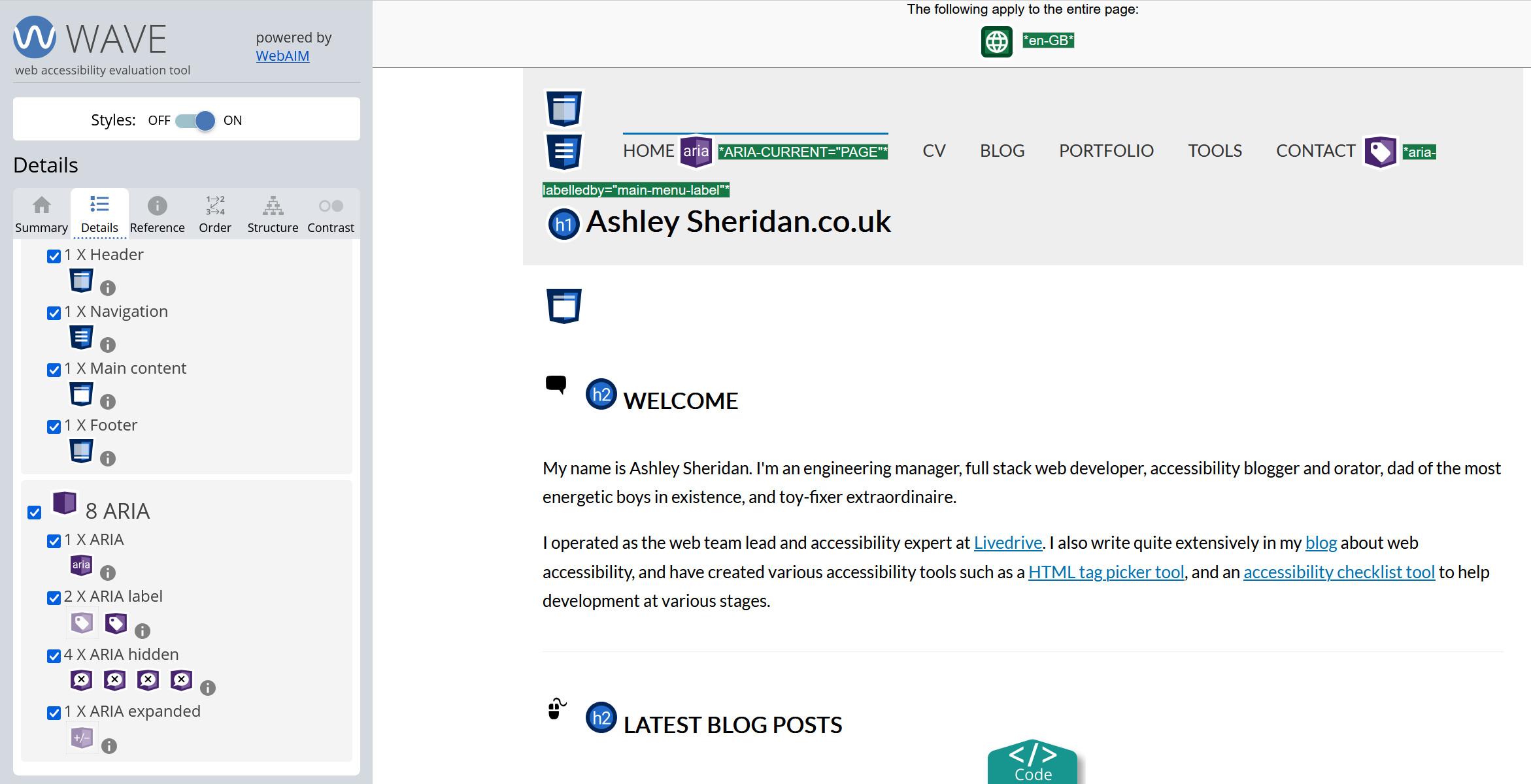

Wave Accessibility Evaluation Tools

Like the Axe tools, Wave offers a browser plugin and command line tools for a more automated approach. The browser plugin is the fastest of all the browser tools shown here, beating even Firefox's built-in dev tools. As well as checking for errors, it highlights key parts of the page, such as headings, landmark areas, and elements with `aria-*` attributes that might alter how they're presented to screen readers and similar assistive technology. These are good areas to focus further manual checks on: the behaviour may well be correct, but it's difficult for an automated test to confirm that it does what you intended.

The tool also has handy tabs to quickly show the document structure (which will largely match the accessibility tree), and also the tabbing order of interactive elements on the page.
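The tabbing order these tools display follows a fairly simple browser rule: elements with a positive `tabindex` come first, in ascending order, followed by elements with `tabindex="0"` and natively focusable elements in document order, while a negative `tabindex` removes an element from the Tab sequence. A simplified sketch of that rule, using a hypothetical `FocusItem` model and ignoring disabled or hidden elements:

```typescript
// Hypothetical, simplified model of an element, listed in document order.
interface FocusItem {
  name: string;
  nativelyFocusable: boolean; // e.g. <a href>, <button>, <input>
  tabIndex?: number;          // value of the tabindex attribute, if any
}

// Approximate the order in which the Tab key visits elements:
// 1. positive tabindex values first, lowest first, ties in document order;
// 2. then tabindex="0" and natively focusable elements, in document order.
// A negative tabindex keeps an element out of the Tab sequence.
// Disabled and hidden elements are not modelled here.
function tabOrder(items: FocusItem[]): string[] {
  const positive: { name: string; tabIndex: number; pos: number }[] = [];
  const natural: string[] = [];
  items.forEach((item, pos) => {
    const t = item.tabIndex;
    if (t === undefined) {
      if (item.nativelyFocusable) natural.push(item.name);
    } else if (t > 0) {
      positive.push({ name: item.name, tabIndex: t, pos });
    } else if (t === 0) {
      natural.push(item.name);
    }
    // t < 0: can still be focused from script, but Tab skips it.
  });
  positive.sort((a, b) => a.tabIndex - b.tabIndex || a.pos - b.pos);
  return [...positive.map((p) => p.name), ...natural];
}
```

This is also why positive `tabindex` values are generally discouraged: they pull an element ahead of everything else on the page, which rarely matches the visual order.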

Manual Testing

Manual testing should be used alongside your automated approach, and it is an absolutely essential part of an accessibility audit. Manual tests can find many issues that automated tests cannot, just as in a typical QA process. Unlike traditional QA testing, though, accessibility testing is heavily skewed towards manual methods, because so many issues need a human touch. The kinds of issues suited to manual testing include:

- Appropriate `alt` text for images. It should work as a replacement for the image, which may or may not mean describing it. Incidentally, this is why AI is poorly suited to writing `alt` text: it often fails to understand the context in which the image is used.

- Colour used on elements to convey meaning, such as coloured dots on a list of messages to denote importance, or a red border as the only indication that a form element is in an error state.

- Appropriate audio descriptions and captions for videos. As I've written about before, AI is pretty terrible at generating text captions when the speaker has an accent it hasn't been suitably trained on. Audio descriptions also need to highlight the key parts of what is happening in the video to supplement the captions, and AI is not yet good at inferring that context correctly.

- Keyboard navigation, to ensure that all areas of the site can be reached without a mouse or other pointer device. Some of your users may be permanently unable to use a mouse, and others might have a temporary injury or ailment which means they are limited to a keyboard. Also, don't forget that some people may just prefer a keyboard, especially when filling out lengthy forms, and moving back and forth between keyboard and mouse will not endear them to your website.

- Screen reader compatibility. Different combinations of browser and screen reader behave in different ways. It's the same situation we've had with browsers for decades, only multiplied.

- Form labels and placeholders. Too often, a website's design uses a placeholder instead of a label, so the hint disappears once the user starts typing into the field. This is bad for some people with cognitive impairments, who may then lose track of what the field is asking for.

- Animations should honour the user's preferences. Some animations can cause severe reactions in people with vestibular disorders, so many of them choose to reduce or disable motion at the operating system level. Some types of animation and movement can only be reliably detected by human testing.
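The operating system setting mentioned above is exposed to web pages through the `prefers-reduced-motion` media feature, usable in CSS via `@media (prefers-reduced-motion: reduce)` or in script via `window.matchMedia`. A minimal sketch of the script side; the `animationDuration` helper and its 300 ms default are illustrative assumptions, and the `matchMedia` function is passed in so the logic can be exercised outside a browser:

```typescript
// The minimal part of window.matchMedia used here, so the logic can also
// run outside a browser (for example, in unit tests).
type MatchMedia = (query: string) => { matches: boolean };

// True when the user has asked their operating system to reduce motion.
function prefersReducedMotion(matchMedia: MatchMedia): boolean {
  return matchMedia("(prefers-reduced-motion: reduce)").matches;
}

// Hypothetical helper: choose an animation duration in milliseconds,
// dropping to 0 (no movement) when reduced motion is requested.
// The 300 ms default is an arbitrary example value.
function animationDuration(matchMedia: MatchMedia, defaultMs = 300): number {
  return prefersReducedMotion(matchMedia) ? 0 : defaultMs;
}
```

In the browser you would call `animationDuration(window.matchMedia.bind(window))`. Code like this doesn't remove the need for manual testing: you still need to switch the setting on and watch what actually moves on the page.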

As with any kind of manual testing, you need to decide how far to go. It may not be feasible for a very small team or an individual to test every possible combination of screen reader and browser, so if possible, test with the most popular screen readers. At the time of writing, NVDA and JAWS account for 78.2% of screen reader usage.

Analysing and Prioritising Issues

Once you've got your list of issues, it's time to prioritise them for resolution.

In the example Accessibility Audit Template, I've included columns to indicate the discovery likelihood (i.e. how likely someone is to encounter the problem), the consequences of the issue (e.g. an unusable site, or a modal that can't be closed with the Escape key), and then the overall severity. The severity column gives a fairly good indication of which issues matter most, but severity isn't the only factor, so I've included one more column estimating the effort needed to resolve each issue. Some of these columns can be filled out later, and both the testers and developers (if these are separate groups) should be involved in agreeing the order in which the issues are resolved.
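The template's exact scoring isn't described here, so the following is only one possible approach, assuming hypothetical 1 to 3 scales for likelihood, consequence, and effort: severity is likelihood multiplied by consequence, and effort breaks ties so that cheaper fixes of equal severity come first.

```typescript
// Hypothetical 1-3 scales (1 = low, 3 = high); the template may use others.
type Level = 1 | 2 | 3;

interface Issue {
  title: string;
  likelihood: Level;  // how likely someone is to encounter the problem
  consequence: Level; // how bad it is when they do
  effort: Level;      // estimated effort to fix
}

// Assumed scoring: severity = likelihood x consequence, giving 1 to 9.
function severity(issue: Issue): number {
  return issue.likelihood * issue.consequence;
}

// Most severe first; among equally severe issues, the cheaper fix first.
// Returns a new array and leaves the input untouched.
function prioritise(issues: Issue[]): Issue[] {
  return [...issues].sort(
    (a, b) => severity(b) - severity(a) || a.effort - b.effort
  );
}
```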

From there, you can plan the work for fixing everything: either dedicate specific time to it (e.g. a sprint or two), or fit the fixes in amongst other work priorities. If you choose the latter, set a firm plan for when the entire list should be addressed. If nothing gets fixed, the audit is worthless.

Implementing Fixes and Re-testing

When resolving each issue, it's important to avoid scope creep as much as possible, because that way lie unintended side effects. Ideally, resolving the issues should follow your normal bug process, which might look something like this:

1. Bug ticket raised

2. Ticket assigned out to developer

3. Dev work done and placed in bug branch

4. Code review and testing on branch

5. Code review/testing failed, go back to step 3

6. PR passed and testing passed, continue

7. Code merged, follow standard release procedure

Re-testing an issue should follow the same steps that were used to discover it. For example, if a form element was found to lack a visible label, the re-test may only need to check it again in a single browser. However, a modal that didn't close when the Escape key was pressed may need testing across a few browsers, as some browsers handle keyboard events differently.

If the issue was discovered by an automated test, try to add that check to your automation pipeline. You could use Git hooks for this, or the options available in your repository host of choice, be it GitHub, Bitbucket, or something else. This helps prevent the issue from creeping back into your code.
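As a sketch of such a pipeline gate, assume your tool's JSON output has been mapped onto the simplified `Violation` type below. That type is hypothetical; only its four impact levels mirror the ones axe reports (`minor`, `moderate`, `serious`, `critical`). The gate fails when anything at or above a chosen threshold is found:

```typescript
// Impact levels as reported by axe; other tools use their own scales.
type Impact = "minor" | "moderate" | "serious" | "critical";

// Hypothetical, simplified result shape: map your tool's JSON output onto it.
interface Violation {
  rule: string;
  impact: Impact;
  page: string;
}

const RANK: Record<Impact, number> = { minor: 0, moderate: 1, serious: 2, critical: 3 };

// Violations at or above the chosen threshold.
function blocking(violations: Violation[], threshold: Impact = "serious"): Violation[] {
  return violations.filter((v) => RANK[v.impact] >= RANK[threshold]);
}

// Exit code for a CI job or Git hook: 0 lets it pass, 1 fails it.
// Each blocking violation is logged so the failure is easy to diagnose.
function exitCode(violations: Violation[], threshold: Impact = "serious"): number {
  const failures = blocking(violations, threshold);
  for (const v of failures) {
    console.error(`[${v.impact}] ${v.rule} on ${v.page}`);
  }
  return failures.length > 0 ? 1 : 0;
}
```

In a Node script run from a Git hook or CI job, you might set `process.exitCode = exitCode(violations)`. Starting with a `serious` threshold and lowering it as the backlog shrinks is one way to avoid blocking every build on day one.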

Finally, keep the audit results. When you next perform an audit, you can compare the two and track how well you are preventing new accessibility problems from entering your code.
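Comparing two audits is straightforward if each issue has a stable identifier. A minimal sketch, assuming a hypothetical key made from the page and the check that failed on it:

```typescript
// Hypothetical key format: the page plus the check that failed on it.
function issueKey(page: string, check: string): string {
  return `${page}|${check}`;
}

// Compare the issue keys from a previous audit with the current one.
function compareAudits(previous: string[], current: string[]) {
  const before = new Set(previous);
  const after = new Set(current);
  return {
    fixed: previous.filter((k) => !after.has(k)),      // gone since last time
    introduced: current.filter((k) => !before.has(k)), // new since last time
    outstanding: current.filter((k) => before.has(k)), // still unresolved
  };
}
```

The `introduced` list is the one to watch: anything in it is an accessibility problem that entered the code after the previous audit.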

Conclusion

Accessibility audits are an important way to understand the state of your websites. If you know where you are, you know where you need to go.

But the effort should not be a one-time thing, or even a rare periodic one. It should be part of your design, content, development, and QA processes. While it might seem difficult and time-consuming at first, if you build it in through small, incremental efforts, you will find it becomes far easier and simply a natural part of the process. This is good, because it reduces the cost and overhead of the work.

Start an accessibility audit now, and find out the state of your website.
